Every time we type something into ChatGPT, Gemini, Midjourney, or any other generative AI tool, we’re doing more than entering text: we’re crafting a prompt. Whether we want a bedtime story, an engaging Instagram caption, or help with a code snippet, the words we enter are not casual input. They’re instructions that shape everything the AI tool generates in return.

A prompt may look like a simple question or command, but it’s actually the spark that triggers a deep and complex sequence of operations inside the model. Think of it like pressing the power button on a laptop or computer. While the action seems simple, it initiates a chain of background operations: drawing power, activating the processor, lighting up the display, enabling the trackpad, and more. Similarly, a prompt kicks off the AI model’s internal mechanisms to produce a relevant and coherent output.

In this article, we’ll pull back the curtain on how generative AI tools work, explore what prompts really are, and see what happens behind the scenes when an AI model receives a prompt. By understanding this process, you’ll gain a clearer sense of how to communicate more effectively with artificial intelligence.

What Is a Prompt, Really?

In simple terms, a prompt is a question or instruction that we give to an AI model. It tells the system what we want, whether that’s writing a blog post, generating an image, or fixing a bug in a code snippet. How you phrase the prompt matters a great deal. A clear and detailed prompt tends to produce precise results, whereas a vague one often leads to unsatisfying outcomes.

In the early days of AI, prompts were rule-based commands. Users had to enter exact inputs, often in machine-specific syntax, to get the desired output. There was little room for flexibility or natural expression. But today, thanks to advances in natural language processing (NLP), we’ve shifted from rigid machine language to natural, human language. AI tools can now understand the nuances of our language and the way we casually write or talk.

Now, the key challenge has evolved: it’s no longer just about how to speak to AI but about how well we communicate our intent when giving prompts.

Key elements of a prompt

A well-structured prompt helps the model understand the context and intent to tailor its response effectively. While not every prompt needs to include all of these elements, understanding their role can significantly improve the quality of our interactions with AI.

- Instruction/Task: This is the core of the prompt and the primary action you want the AI to perform. For example, “Generate a short story about a futuristic city,” “Summarize this research paper,” or “Write Python code to sort a list of numbers.”

- Context: This provides background information or relevant details that help the AI understand the request more precisely. For a summary, the text to be summarized is the context. To generate a product description, the product’s features and target audience serve as the context.

- Constraints: These are specific limitations, rules, or requirements for the output. This could include a word count (e.g., “under 200 words”), a specific tone (e.g., “write in a humorous tone” or “maintain a formal academic style”), or the inclusion or exclusion of certain topics.

- Format: This defines the desired structure or style of the AI’s output. We might want a bulleted list, a multi-paragraph essay, a JSON object, a dialogue script, or an image rendered in a particular artistic style.

Each element adds to the clarity with which the AI can interpret your intent. In general, the more detailed and well-considered your prompt, the more accurate and creative the result tends to be.
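To make the four elements concrete, here is a minimal Python sketch that assembles a prompt from them. The function name `build_prompt`, the section labels (“Task:”, “Context:”, and so on), and the water-bottle example are hypothetical illustrations, not a format that any particular AI tool requires.

```python
from typing import Optional


def build_prompt(
    instruction: str,
    context: Optional[str] = None,
    constraints: Optional[list[str]] = None,
    output_format: Optional[str] = None,
) -> str:
    """Assemble a prompt from the four elements; missing elements are skipped."""
    sections = [f"Task: {instruction}"]  # the instruction is the only required part
    if context:
        sections.append(f"Context: {context}")
    if constraints:
        # One constraint per line keeps each requirement easy to spot.
        bullet_lines = "\n".join(f"- {c}" for c in constraints)
        sections.append(f"Constraints:\n{bullet_lines}")
    if output_format:
        sections.append(f"Format: {output_format}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    # Hypothetical example request.
    print(build_prompt(
        instruction="Write a product description for a reusable water bottle.",
        context="Audience: hikers. Features: 1 L capacity, keeps drinks cold for 24 hours.",
        constraints=["Under 200 words", "Friendly, upbeat tone"],
        output_format="Three short paragraphs",
    ))
```

Because every element except the instruction is optional, the same function covers a bare one-line request as well as a fully specified one.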

What Happens Inside the Model When You Prompt It?

Now that we know what a prompt is and what it contains, let’s see what happens in the background when an AI model receives one.

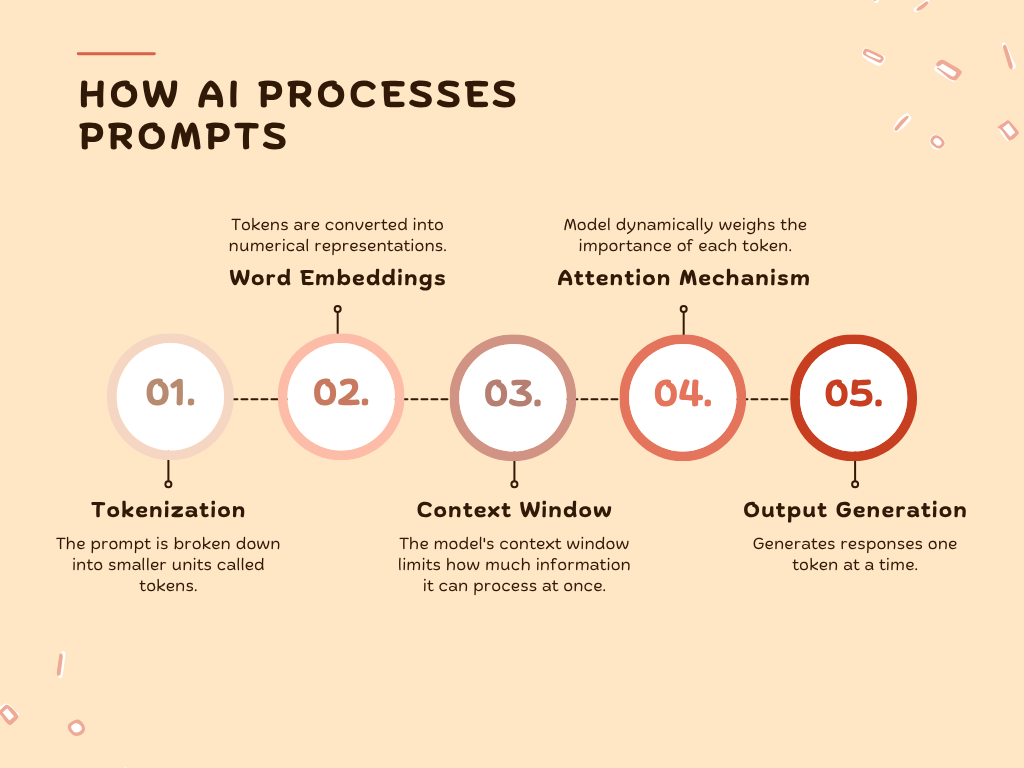

When you enter a prompt into a large language model (LLM) like ChatGPT or Gemini, it doesn’t read the sentence as plain English. It breaks the input down, processes it mathematically, and generates a response one token at a time. Broadly, this processing can be described in five stages.

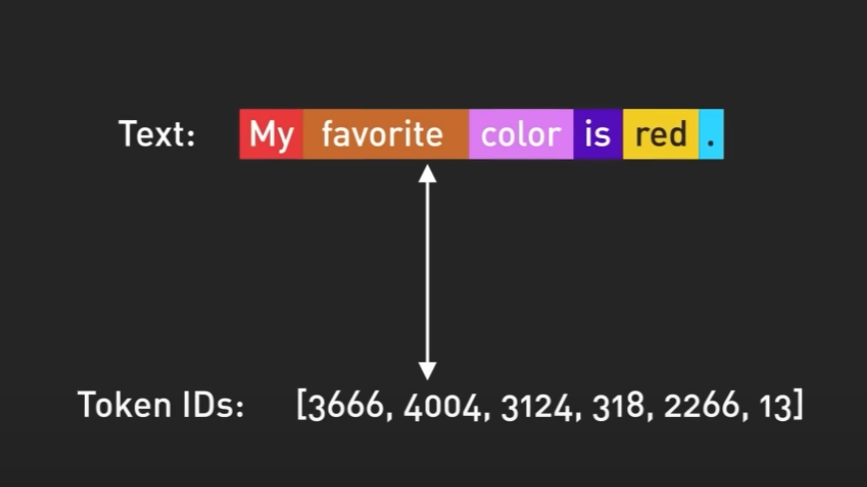

(1) Tokenization

The prompts we type are not processed directly by the AI. Instead, they are broken down into smaller units called tokens. A token can be a whole word (“cat”), part of a word (“un-” or “-ing”), a punctuation mark, or even a single character. For example, the sentence “My favorite color is red.” might be split into six tokens (“My,” “favorite,” “color,” “is,” “red,” and “.”), each mapped to a numeric token ID, and the word “unbelievable” might be tokenized into “un,” “believ,” and “able.” The exact split depends on the model’s tokenizer. These tokens, not the original text, are the fundamental building blocks the model actually works with.
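The tokenization step above can be sketched with a toy tokenizer. This is a minimal illustration, assuming a tiny hand-written vocabulary (`TOY_VOCAB`, hypothetical) and a simple greedy longest-match rule; real tokenizers such as byte-pair encoding (BPE) learn vocabularies of tens of thousands of entries from data and are case-sensitive, whereas this sketch lowercases its input for simplicity.

```python
import re

# Hypothetical toy vocabulary: token string -> token ID.
TOY_VOCAB = {
    "my": 0, "favorite": 1, "color": 2, "is": 3, "red": 4, ".": 5,
    "un": 6, "believ": 7, "able": 8, "<unk>": 9,
}


def tokenize(text: str) -> list[str]:
    """Split text into tokens by greedy longest match against TOY_VOCAB."""
    tokens = []
    # First separate words from standalone punctuation marks.
    for piece in re.findall(r"\w+|[^\w\s]", text.lower()):
        start = 0
        while start < len(piece):
            # Try the longest possible substring first, then shorter ones.
            for end in range(len(piece), start, -1):
                if piece[start:end] in TOY_VOCAB:
                    tokens.append(piece[start:end])
                    start = end
                    break
            else:
                # Nothing matched: emit an unknown token and skip one character.
                tokens.append("<unk>")
                start += 1
    return tokens


def encode(text: str) -> list[int]:
    """Map each token to its numeric ID, which is what the model receives."""
    return [TOY_VOCAB[t] for t in tokenize(text)]


if __name__ == "__main__":
    print(tokenize("My favorite color is red."))
    print(encode("My favorite color is red."))
    print(tokenize("unbelievable"))
```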

(2) Word Embeddings (Vector Embeddings)

Once tokenized, each token is converted into a numerical representation known as a word embedding or vector embedding. These embeddings help the model extract the meaning and context of words from the prompt. Think of it like a map where related words, like “cat” and “kitten,” are closer together, and unrelated words, like “carbohydrates” and “carburetor,” are farther apart. Though different AI models may use different embedding techniques, the goal is the same: to represent the meaning of words through high-dimensional numerical vectors.
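The “map” analogy can be made concrete with cosine similarity, a common way to measure how close two embedding vectors point. The four-dimensional vectors below are hypothetical, hand-picked values chosen to mirror the example in the text; real embeddings are learned during training and typically have hundreds or thousands of dimensions.

```python
import math

# Hypothetical 4-dimensional embeddings, hand-picked for illustration only.
EMBEDDINGS = {
    "cat":           [0.90, 0.80, 0.10, 0.00],
    "kitten":        [0.85, 0.75, 0.20, 0.05],
    "carbohydrates": [0.05, 0.10, 0.90, 0.30],
    "carburetor":    [0.00, 0.05, 0.20, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: near 1 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    for w1, w2 in [("cat", "kitten"), ("carbohydrates", "carburetor"), ("cat", "carburetor")]:
        score = cosine_similarity(EMBEDDINGS[w1], EMBEDDINGS[w2])
        print(f"{w1} vs {w2}: {score:.2f}")
```

With these toy values, “cat” and “kitten” score far higher than the unrelated pairs, which is the property the map analogy describes.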

(3) Context Window

Each model has a fixed limit on how many tokens it can consider at once, known as its context window. This acts as the model’s short-term, or working, memory. For example, GPT-4 Turbo and GPT-4o can process up to 128,000 tokens at a time. If your prompt, along with the previous conversation history, exceeds this limit, the oldest parts of the input are typically truncated, so the model effectively forgets them. The context window therefore defines how much information the model can draw on when generating a response.
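One common way an application keeps a conversation inside the context window is to drop the oldest messages first. The sketch below assumes a hypothetical helper `fit_to_context` and approximates token counts by splitting on whitespace, which is only a rough stand-in for a real tokenizer. Actual products may instead reject the request, summarize older turns, or always preserve a system message.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits max_tokens.

    Token counts are approximated by whitespace splitting (an assumption);
    a real system would count tokens with the model's own tokenizer.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = ["Hi there!", "Tell me about tokens.", "Now explain embeddings in detail."]
    print(fit_to_context(history, max_tokens=9))
```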

(4) Attention Mechanism

One of the key features of transformer-based models is the attention mechanism. It allows the model to dynamically weigh the importance of each token relative to the others when generating output. For example, in the prompt “Summarize the key points of the report,” the model is likely to give more weight to tokens like “summarize” and “key points” than to less informative tokens like “the.” The attention mechanism helps the model stay focused on what’s important in the prompt.
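At its core, transformer attention is usually computed as scaled dot-product attention: each token’s query vector is compared with every token’s key vector, the scores are divided by the square root of the vector dimension, passed through a softmax to get weights, and used to average the value vectors. The sketch below does this for a single query in pure Python. The 2-D vectors are hypothetical; real models derive queries, keys, and values from learned projections and run many attention heads in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: list[float], keys: list[list[float]], values: list[list[float]]):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The output is the attention-weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


if __name__ == "__main__":
    tokens = ["summarize", "the", "key", "points"]
    # Hypothetical key vectors, reused as values for simplicity.
    keys = [[2.0, 0.0], [0.0, 0.1], [1.5, 0.5], [1.0, 0.5]]
    weights, _ = attend([1.0, 0.0], keys, keys)
    for token, w in zip(tokens, weights):
        print(f"{token:>10}: {w:.2f}")
```

With these toy vectors, “summarize” receives the largest weight and “the” the smallest.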

(5) Output Generation

Finally, the model generates its response. Working from the tokenized, embedded, and contextually weighted input, it doesn’t simply pull an answer from memory; it predicts the next token one step at a time, using probabilities. For example, after seeing the phrase “Once upon a,” the model might assign a high probability to “time,” but also consider other options like “dream” or “night” based on learned patterns. This process is called autoregressive generation: the model predicts one token at a time, and each predicted token becomes part of the input for predicting the next. This allows the model to construct a coherent and contextually relevant response.
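The autoregressive loop can be sketched with a hypothetical next-token probability table. In this simplified version, probabilities depend only on the previous token and the loop always picks the most likely candidate (greedy decoding). A real LLM computes a probability for every token in its vocabulary from the entire context using a neural network, and chat tools often sample from those probabilities (for example, with a temperature setting) rather than always taking the top choice. The `<end>` marker is also an assumption of this sketch.

```python
# Hypothetical next-token probabilities, conditioned only on the previous token.
NEXT_TOKEN_PROBS = {
    "a": {"time": 0.85, "dream": 0.10, "night": 0.05},
    "time": {",": 0.6, "there": 0.4},
    ",": {"there": 0.9, "a": 0.1},
    "there": {"lived": 0.7, "was": 0.3},
    "lived": {"<end>": 1.0},
    "was": {"<end>": 1.0},
}


def generate(prompt_tokens: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy autoregressive generation over the toy probability table."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = NEXT_TOKEN_PROBS.get(tokens[-1])
        if probs is None:
            break  # the toy table has no prediction for this token
        next_token = max(probs, key=probs.get)  # pick the most probable token
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the new token becomes context for the next step
    return tokens


if __name__ == "__main__":
    print(" ".join(generate(["once", "upon", "a"])))
```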

Types of Prompts and Why They Matter

Now that we’ve seen what happens inside an AI model, let’s explore the different types of prompts and how they influence the model’s responses. The way we structure and phrase a prompt significantly affects how the model behaves: the prompt determines the initial numerical representations (embeddings) and guides the attention mechanism that weighs different parts of the input.

As the saying goes in the AI community, “You get out what you put in.” This couldn’t be truer for prompts.

Zero-Shot Prompting

This is the most basic form of prompting, where we give the AI an instruction without any examples. It works well when the task is clear and the model has likely encountered similar tasks during training.

“Explain what an internet browser is in simple terms.”

One-Shot Prompting

Here, we include a single example within the prompt to demonstrate the desired format or tone and ask the model to replicate it. The example acts as an in-context reference for the AI model.

“Here’s an example of a simple tech explanation: A website is like a digital book with many pages, accessed online. Now, explain what an internet browser is using a similar, single-sentence analogy.”

Few-Shot Prompting

As the name suggests, this involves giving a few examples to guide the AI’s output. Few-shot prompting helps the model generalize better, especially in creative or nuanced tasks.

“Here are a few simple explanations for internet terms:

Example 1: Website: A digital book with many pages.

Example 2: Search Engine: A digital librarian that helps you find websites.

Example 3: Email: A digital letter you send and receive.

Now, explain what an internet browser is, following a similarly simple, analogy-based format.”
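Zero-shot, one-shot, and few-shot prompts differ mainly in how many worked examples precede the task, so one function can produce all three. The sketch below is a hypothetical helper (`build_k_shot_prompt`) that reuses the internet-terms examples above; the header wording is an illustrative choice, not a required format.

```python
def build_k_shot_prompt(task: str, examples: list[tuple[str, str]]) -> str:
    """No examples gives zero-shot, one gives one-shot, several give few-shot."""
    if not examples:
        return task  # zero-shot: the instruction alone
    lines = ["Simple explanations for internet terms:", ""]
    for i, (term, explanation) in enumerate(examples, start=1):
        lines.append(f"Example {i}: {term}: {explanation}")
    lines += ["", task]  # the actual request comes after the examples
    return "\n".join(lines)


EXAMPLES = [
    ("Website", "A digital book with many pages."),
    ("Search Engine", "A digital librarian that helps you find websites."),
    ("Email", "A digital letter you send and receive."),
]
TASK = "Now, explain what an internet browser is, following a similarly simple, analogy-based format."

if __name__ == "__main__":
    print(build_k_shot_prompt(TASK, EXAMPLES[:1]))  # one-shot
    print()
    print(build_k_shot_prompt(TASK, EXAMPLES))      # few-shot
```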

Chain-of-Thought Prompting

This technique explicitly asks the AI to show its step-by-step reasoning before providing a final answer. By asking for intermediate steps, this method often reduces errors and improves coherence in complex tasks.

“If you were explaining ‘what an internet browser is’ to someone who is completely new to technology, what thought process would you follow to simplify it? Outline your steps for explaining it clearly, then provide the actual explanation.”

Negative Prompting

In negative prompting, we explicitly instruct the model to exclude certain elements, styles, or information from its response. It helps the model avoid unwanted details and produce more focused outputs.

“Explain how an internet browser works, but avoid using complex terms and technical jargon.”

Roleplay Prompting

In this type, we instruct the AI to adopt a specific persona or role. This shapes the tone, language, and expertise level of the response.

“Imagine you are a friendly IT support specialist helping a non-tech-savvy person. Explain what an internet browser is in very simple and straightforward terms.”
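In practice, roleplay and negative prompting are often combined in a system-style message that sets the persona and lists what to avoid. The sketch below builds a list of role/content messages in the shape used by several chat APIs (OpenAI’s Chat Completions API, for instance, accepts `system` and `user` roles). It only constructs the data and sends nothing; the function name `build_chat_messages` and the exact wording are hypothetical.

```python
from typing import Optional


def build_chat_messages(persona: str, user_request: str,
                        avoid: Optional[list[str]] = None) -> list[dict]:
    """Combine a roleplay persona and optional exclusions into chat messages."""
    system = f"You are {persona}."  # roleplay: set the persona up front
    if avoid:
        # Negative prompting: state explicitly what to leave out.
        system += " Avoid " + ", ".join(avoid) + "."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_request},
    ]


if __name__ == "__main__":
    for message in build_chat_messages(
        persona="a friendly IT support specialist helping a non-tech-savvy person",
        user_request="Explain what an internet browser is in very simple terms.",
        avoid=["complex terms", "technical jargon"],
    ):
        print(message)
```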

Each prompt type steers the model in a different way. Even subtle changes, like adjusting the format, adding context, or defining a tone, can dramatically alter the result. When we introduce examples, constraints, or a role, we change the tokens the model attends to, which shifts the patterns it draws on and tends to produce responses more closely aligned with our specific needs.

Is Prompt Engineering a Necessary Skill?

With the rise of generative AI, a new term has emerged: prompt engineering, the art of crafting effective prompts to get the best results from AI models. But is it a must-have skill, or merely a temporary phase in our evolving interaction with machines?

Right now, LLM prompting is one of the most in-demand skills in the AI landscape. With well-phrased input, we can generate detailed essays, working code, image descriptions, or marketing copy.

At the same time, natural user interfaces (UIs) and function calling are changing the landscape. Instead of typing prompts, we might click buttons, fill out forms, or speak naturally while the AI converts those actions into optimized prompts behind the scenes. Some AI tools can even act as prompt writers, helping us craft effective prompts; platforms like PromptHub and Originality.ai are examples. There are also marketplaces like PromptBase where we can buy pre-made prompts for specific tasks.

This raises a fascinating question: Will prompts eventually disappear? Maybe. In many AI-powered products, we might never see the prompt, just the result. While prompt engineering may not always remain in the spotlight, its core principles (clarity, intention, and effective communication) will remain essential as AI becomes more deeply integrated into our lives.

Prompting Forward: AI’s New Language

Prompts aren’t just lines of text. They’re a new form of communication. In a world increasingly shaped by AI, knowing how to write a good prompt is becoming a new kind of digital literacy, much like coding or typing once was. And prompts aren’t going away. They’re evolving. Today, we write them. Tomorrow, we might speak them. Eventually, we may not even consciously perceive them. Yet, the underlying skill behind effective prompting will continue to shape how we interact with AI systems.

So, the next time you open a chat window or launch a generative tool, resist the urge to type the first thing that comes to mind. Pause, think, and prompt with purpose, because you’re not just feeding a machine; you’re starting a conversation with intelligence.

This article was contributed to the Scribe of AI blog by Mehavannen MP.

At Scribe of AI, we spend day in and day out creating content to drive traffic to your AI company’s website and educate your audience on all things AI. This is a space for our writers to have a little creative freedom and show off their personalities. If you would like to see what we do during our 9 to 5, please check out our services.