A couple of years ago, AI in software development mostly meant autocomplete. Tools like GitHub Copilot and Codeium could predict the next line of code or suggest a snippet. Helpful, but limited. They made typing faster, not shipping faster.

That’s changing. Developers are moving from relying on basic autocomplete features to adopting powerful AI coding tools and agents that act across the full development lifecycle. The difference is clear: an assistant advises; an agent carries out the task.

Today, a developer can point to a bug and say, “Fix that.” The AI can edit the code, run the tests, and open a pull request. Work that once took hours can shrink to minutes, often with fewer errors.

The economic shift is just as significant. The AI agents market is projected to hit $103.6 billion by 2032, showing how quickly agents are becoming teammates in modern software development.

This article explores what AI agents are, why they matter, how they fit into the development workflow, who’s building them, and how to choose the right one for your needs.

What Is an AI Agent and Why Does It Matter?

An AI agent is more than a code suggestion tool. It is software that can take a high-level request, determine the necessary steps, and then execute them. Instead of stopping at “Here is a possible line of code,” it follows through until the task is complete.

Consider testing as an example. In many teams, adding or updating tests is tedious but necessary work. An agent can scan the codebase, identify functions without coverage, generate test cases, run them against the system, and prepare the results for review. The developer stays in control, but the effort shifts from manual setup to oversight.
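
The coverage-scanning step in that example can be sketched in a few lines. This is a minimal illustration, not how any particular agent works: it assumes a Python codebase, treats a function as "covered" if its name appears anywhere in the test code, and uses the hypothetical names `untested_functions`, `APP`, and `TESTS`. A real agent would rely on coverage tooling rather than name matching.

```python
import ast


def untested_functions(source: str, test_source: str) -> list[str]:
    """Return top-level public functions in `source` that `test_source` never mentions."""
    defined = [
        node.name
        for node in ast.parse(source).body
        if isinstance(node, ast.FunctionDef) and not node.name.startswith("_")
    ]
    # Collect every identifier the tests use (bare names and attribute access).
    referenced = set()
    for node in ast.walk(ast.parse(test_source)):
        if isinstance(node, ast.Name):
            referenced.add(node.id)
        elif isinstance(node, ast.Attribute):
            referenced.add(node.attr)
    return [name for name in defined if name not in referenced]


# Hypothetical application code and test file, inlined as strings for the sketch.
APP = """
def add(a, b):
    return a + b

def divide(a, b):
    return a / b
"""

TESTS = """
def test_add():
    assert add(2, 3) == 5
"""

print(untested_functions(APP, TESTS))  # prints ['divide']
```

The list it returns is the kind of starting point an agent could use before generating test cases and handing the results to a developer for review.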

This has become possible because today’s AI models can do more than generate text. They can reason about problems, call APIs, run commands, and integrate with tools such as GitHub, CI/CD pipelines, and cloud platforms. This combination of reasoning and action turns them from passive assistants into active participants in the workflow.

The result is a shift in how teams use their time. Work that once involved long cycles of editing, testing, and fixing can now move forward in a single pass. Reviews arrive sooner, errors surface earlier, and developers are free to focus on design decisions and larger technical challenges.

How AI Agents Work

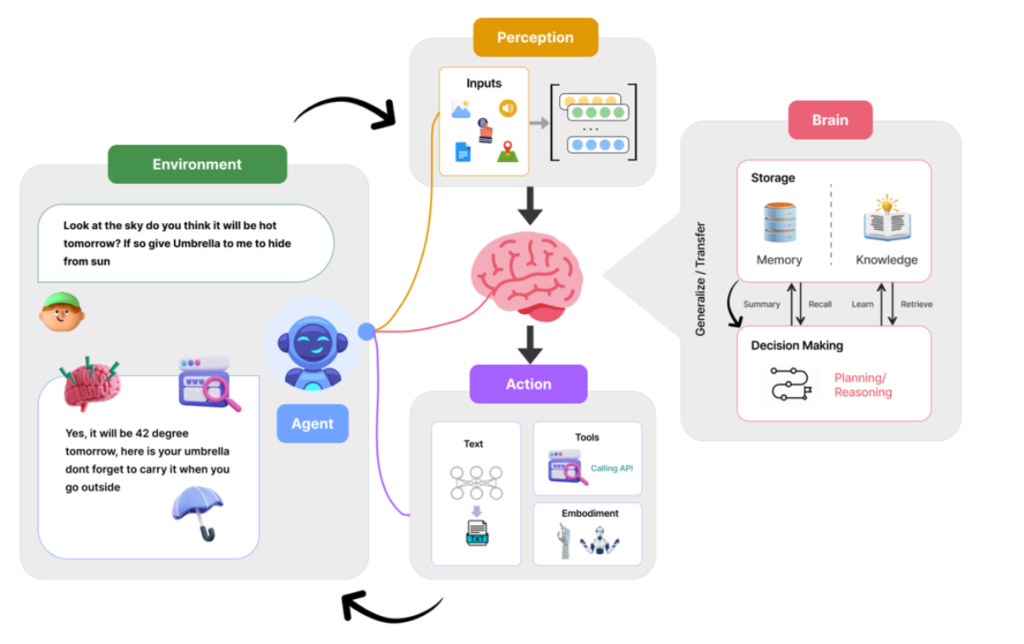

What makes AI agents different from earlier tools is not just that they can generate code, but that they can carry out entire workflows from start to finish. At their core, agents combine three capabilities:

- Understanding: They interpret a developer’s high-level request, whether it’s “add tests for this function” or “fix that bug.”

- Reasoning: Instead of offering a single suggestion, they break the request into steps, such as identifying missing coverage, generating code, running checks, or creating deployment scripts.

- Action: Finally, they execute those steps by editing files, calling APIs, running commands, or opening a pull request.

Under the hood, this is powered by large language models connected to external tools. The model handles reasoning, while integrations with GitHub, CI/CD pipelines, testing frameworks, and cloud platforms allow the agent to act.

A simple example illustrates the difference. With an autocomplete tool, you might get a suggestion for how to fix a bug, but then you still need to edit the file, rerun tests, and create a pull request yourself. An agent, on the other hand, can edit the file automatically, run the test suite to confirm the fix, and open a pull request ready for review.
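
The edit, test, and pull request sequence described above can be sketched as a small loop. Everything here is a stand-in chosen for illustration: `plan` is a hard-coded substitute for the language model, `Workspace` is an in-memory substitute for a repository, and `open_pull_request` only records a log entry instead of calling a code host. It is a sketch of the understand, reason, act pattern under those assumptions, not any vendor's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Workspace:
    """In-memory stand-in for a repository; a real agent would touch disk and git."""
    files: dict[str, str]
    log: list[str] = field(default_factory=list)


def plan(request: str) -> list[str]:
    # Hypothetical planner: a real agent would ask a language model for these steps.
    if "fix" in request.lower():
        return ["edit_file", "run_tests", "open_pull_request"]
    return []


def edit_file(ws: Workspace) -> bool:
    # Stubbed "fix": swap the buggy operator for the correct one.
    ws.files["calc.py"] = ws.files["calc.py"].replace("a - b", "a + b")
    ws.log.append("edited calc.py")
    return True


def run_tests(ws: Workspace) -> bool:
    # Execute the edited module and check one behaviour, standing in for a test suite.
    namespace: dict = {}
    exec(ws.files["calc.py"], namespace)
    passed = namespace["add"](2, 3) == 5
    ws.log.append("tests passed" if passed else "tests failed")
    return passed


def open_pull_request(ws: Workspace) -> bool:
    # Placeholder: a real agent would call its code host's API here.
    ws.log.append("pull request opened")
    return True


TOOLS: dict[str, Callable[[Workspace], bool]] = {
    "edit_file": edit_file,
    "run_tests": run_tests,
    "open_pull_request": open_pull_request,
}


def run_agent(request: str, ws: Workspace) -> list[str]:
    """Run each planned step in order and stop at the first one that fails."""
    for step in plan(request):
        if not TOOLS[step](ws):
            ws.log.append(f"stopped at {step}")
            break
    return ws.log


ws = Workspace(files={"calc.py": "def add(a, b):\n    return a - b\n"})
print(run_agent("Fix that bug", ws))
# prints ['edited calc.py', 'tests passed', 'pull request opened']
```

Stopping at the first failed step is a deliberate choice in this sketch: a pull request is only opened once the tests pass, which keeps the developer's review focused on changes that already work.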

Best AI Agents and Coding Tools Developers Use Today

AI agents are no longer limited to writing small pieces of code. They are starting to support the entire journey from idea to production. At each stage of development, agents are taking on routine work so teams can move faster and focus on higher-level problems.

Let us look at some of the main areas where agents are already making a difference.

Coding Agents (in Your Editor)

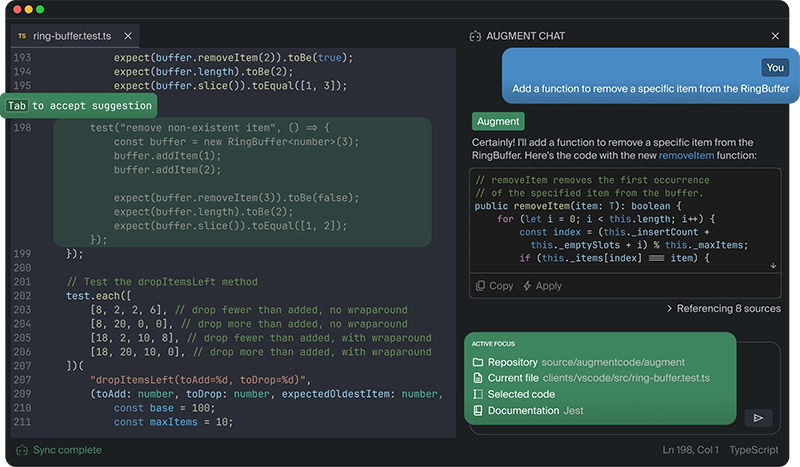

The editor is where developers spend most of their time, and it is also where AI agents have become the most capable. They now go beyond autocomplete, taking on larger tasks such as refactoring code, generating tests, and even preparing pull requests. Each tool approaches this in a different way, shaped by the problems it was designed to solve.

Here are some of the leading options.

- GitHub Copilot (Agent mode): Copilot started as a simple autocomplete tool, but in agent mode, it has grown into a true collaborator. It can follow instructions, edit files, write tests, and prepare pull requests automatically. Because it is tightly integrated with GitHub, adoption is seamless for teams that already manage their projects on the platform. GitHub also offers an in-house AI app builder called GitHub Spark.

- Sourcegraph Cody: Cody builds on Sourcegraph’s foundation as a search and navigation tool for large codebases. It specializes in understanding massive, complex systems with millions of lines of code. This makes it particularly valuable for enterprise teams maintaining legacy applications or dealing with intricate dependencies.

- Replit Agents: Replit’s mission has always been to make coding more accessible, starting from the browser. Its agents carry that forward by helping developers prototype, test, and deploy applications in one place. This approach removes infrastructure headaches, which is why Replit has become popular with startups, educators, and indie developers.

- Amazon Q Developer: Amazon’s offering goes beyond coding support to integrate directly with AWS services. It can suggest cloud resources, fix configuration issues, and guide deployment alongside writing code. For teams already invested in AWS, it feels like having a built-in DevOps teammate.

- Tabnine Agents: Tabnine earned its reputation by focusing on privacy and compliance. Its on-premise deployment options mean enterprises in finance, healthcare, or government can use AI support without exposing sensitive code to the cloud. This makes it one of the most trusted tools for regulated industries.

- Cursor: Unlike most tools that extend existing editors, Cursor is an IDE built specifically for AI collaboration. Features like its bug-fixing bot and conversational “vibe coding” mode turn the development process into more of a dialogue with the agent. It appeals to teams who want to experiment with new, more interactive ways of working.

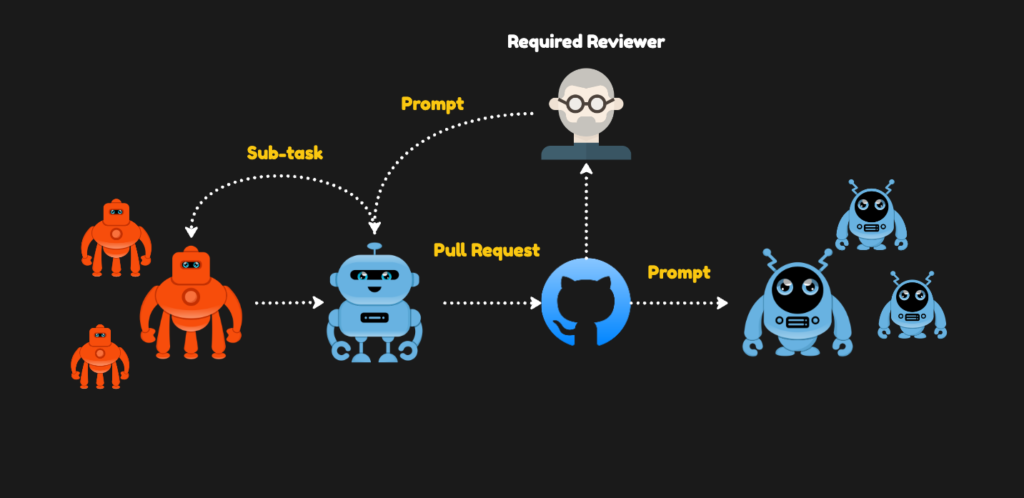

Pull Request and Code Review Agents

After the code is written, it usually goes through a pull request before being merged. This step is essential for quality but often slows teams down, especially when reviews pile up or small changes wait days for approval. AI agents are now helping speed up this stage while keeping standards high.

Here are some of the agents making a difference in this space:

- Bito: Bito focuses on speed and automation. It can generate pull requests automatically and review existing code, which helps teams avoid the delays that come from waiting for someone to draft or check a PR manually. By taking care of the repetitive aspects of the process, Bito ensures that developers spend less time on administrative overhead and more time on building features.

- Tabnine Code Review Agent: Built with enterprise needs in mind, Tabnine’s review agent emphasizes strict consistency. It enforces coding standards, compliance requirements, and style guidelines across the codebase. This makes it especially valuable for large organizations where dozens or even hundreds of developers are contributing code, and maintaining uniform practices is critical for long-term maintainability.

- CodeRabbit and Axolo: These smaller but practical tools bring automation to teams that don’t necessarily need heavy enterprise solutions. They highlight issues, suggest improvements, and help close pull requests faster without sacrificing quality. Their strength lies in making reviews more accessible, giving small and mid-sized teams the benefits of automated review without the complexity of setting up large-scale systems.

Frontend and Design-to-Code Agents

Turning a design into working code has always been a slow handoff. Designers create mockups, and developers translate them into components and layouts. This step often takes days and can delay product development. AI agents are now helping to close this gap by turning designs into usable code much more quickly.

Here are some of the leading agents in this area:

- Replit Full-Stack Deploy: Replit is best known for its accessible, browser-based coding environment, and its agents extend that philosophy into frontend development. With Replit’s full-stack deploy feature, developers can create frontend prototypes and connect them directly to backend logic in the same workspace. This means an early design idea can be turned into a running application with minimal setup, which suits startups, educators, and indie developers who need speed over complex pipelines.

- Uizard: Uizard approaches the design-to-code problem from a prototyping perspective. It allows users to upload wireframes or even rough sketches and then automatically converts them into ready-to-use code. This is especially powerful in the early stages of product design, where speed matters more than polish. By shortening the feedback loop between design and engineering, Uizard helps teams validate ideas quickly before investing heavily in development.

- Anima: Anima focuses on bridging the gap between professional design tools and frontend code. It can take Figma or Sketch files and transform them into responsive React code that’s production-ready. This reduces the amount of repetitive work for frontend developers, while also ensuring that what appears in the design is faithfully reflected in the implementation. For teams that need consistency between design and code, Anima helps eliminate the common “design drift” problem.

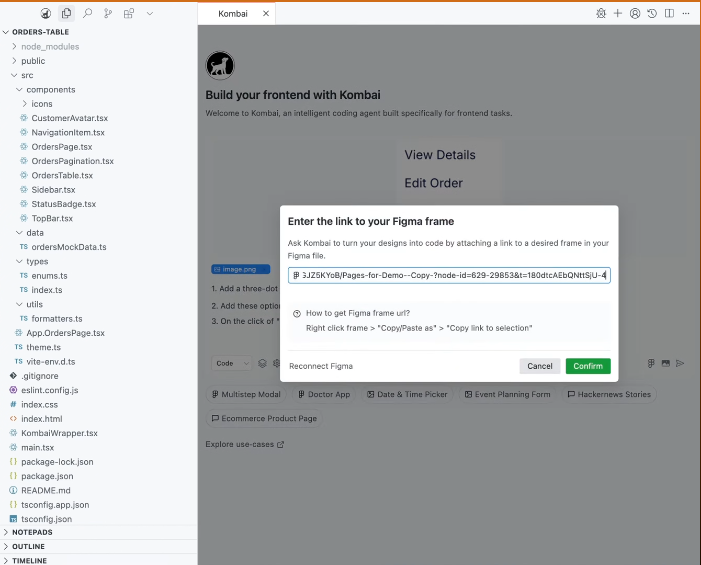

- Kombai: Kombai positions itself as a specialist for complex designs. While many tools struggle to preserve responsiveness and detail when converting from Figma, Kombai’s strength lies in producing clean React or HTML/CSS code that closely mirrors the original design. This makes it a strong choice for teams that want to move quickly from high-fidelity mockups to production without sacrificing accuracy or layout integrity.

Debugging and Testing Agents

Bugs and missing tests are two of the biggest reasons development slows down. Finding a problem, fixing it, and making sure the change does not break something else can take hours. Writing and maintaining test suites can be just as time-consuming, often feeling like busywork. AI agents are stepping in to automate both of these tasks and give developers back valuable time.

Here are some of the agents leading the way:

- Cursor Bugbot: Built directly into the Cursor IDE, Bugbot acts as an always-on debugging companion. It can detect issues, propose fixes, and even apply them automatically when the developer approves. By reducing the manual effort required to chase down errors, Bugbot helps developers maintain focus and momentum rather than getting bogged down in repetitive debugging cycles.

- Google Jules (experimental): Jules is one of Google’s early experiments in agent-driven debugging. It not only repairs code but also integrates with workflows by opening pull requests containing the fixes. While still in development, it highlights the potential for debugging agents to plug seamlessly into team collaboration, handling everything from identifying the issue to preparing a solution ready for review.

- Diffblue Cover: Diffblue has carved out a niche in automated testing, with Cover specializing in generating unit tests for Java applications. This is particularly valuable for enterprises with massive or legacy Java codebases where writing and maintaining tests manually is unrealistic. By automating test creation, Diffblue Cover helps improve coverage and reliability while saving teams from weeks of repetitive effort.

- Mabl and Testim: These tools focus on end-to-end testing, where the challenge isn’t just creating tests but keeping them up to date as the codebase evolves. Mabl and Testim use AI to automatically maintain and update test suites whenever the underlying code changes. This reduces the overhead of test upkeep, making it easier for teams to ensure quality without slowing down development.

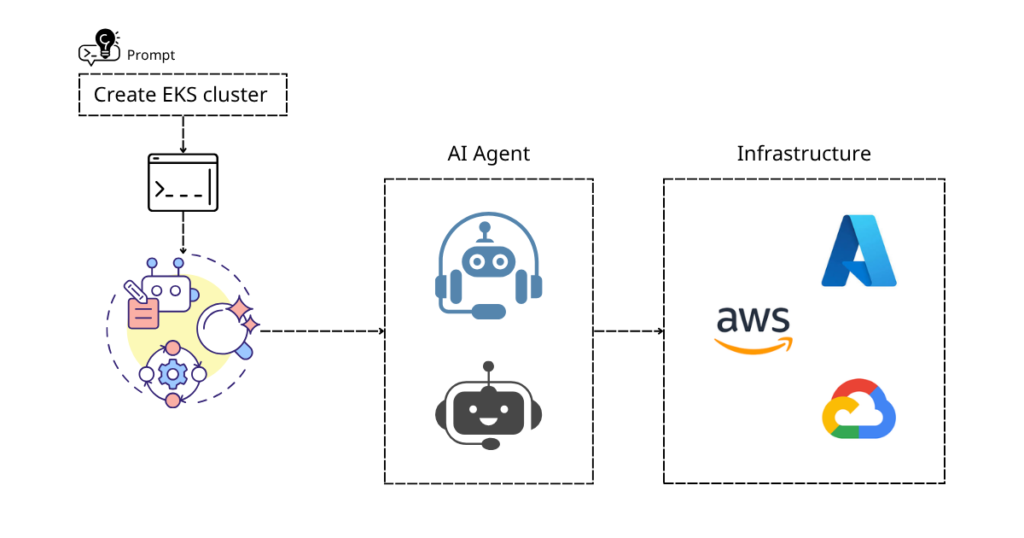

DevOps and Infrastructure Agents

Getting code from a repository into production is one of the most complex parts of software development. It often requires setting up servers, writing infrastructure scripts, and managing CI/CD pipelines. Mistakes here can lead to downtime, security risks, or failed deployments. AI agents are beginning to simplify this step by turning plain instructions into working infrastructure code and deployment actions.

Here are some of the agents making this process easier:

- Pulumi AI and Terraform AI: These agents are transforming how developers interact with infrastructure. Instead of writing detailed configuration files, teams can describe what they need in natural language, and the agent translates that into scripts ready for deployment. This approach lowers the barrier to entry for developers who may not be experts in infrastructure-as-code tools, while also reducing the risk of human error in complex configuration management.

- Amazon Q Developer: Amazon’s agent goes a step beyond traditional coding support by connecting development directly to AWS environments. It suggests commands, helps resolve configuration issues, and streamlines deployment steps. For teams already committed to AWS, Q acts like an embedded DevOps engineer, ensuring that applications move smoothly from code to cloud without unnecessary friction.

- Azure Copilot and Google Cloud Assistants: Both Microsoft and Google have introduced agents tailored to their respective cloud ecosystems. These tools help teams through setup, deployment, and security tasks, guiding them with guardrails that reduce the chances of costly errors. By embedding AI agents into the cloud environments themselves, they make infrastructure management more accessible and help teams take advantage of platform-specific best practices without having to become experts in every detail.

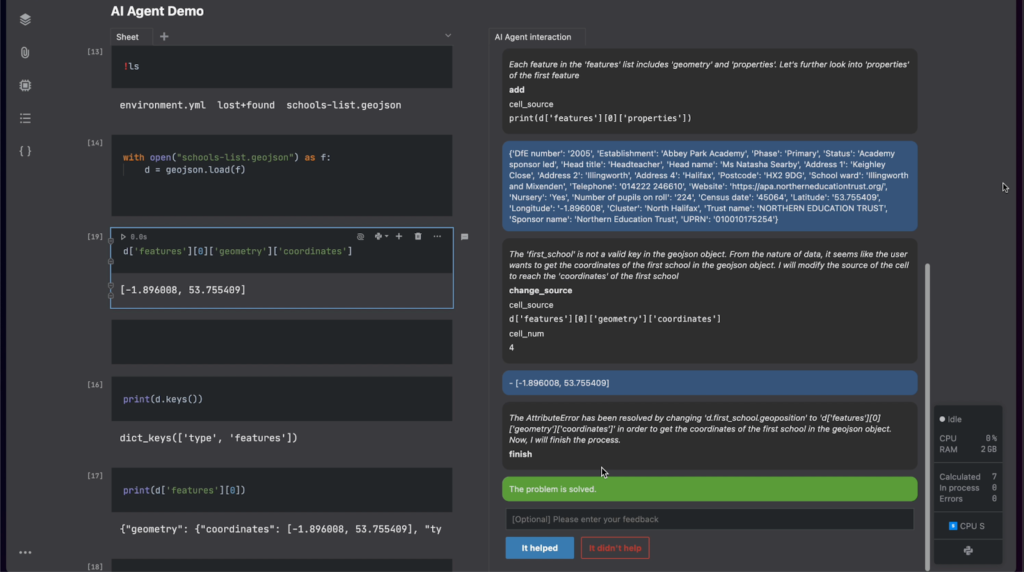

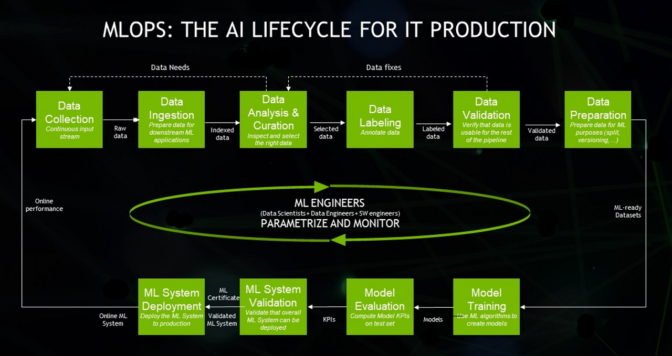

AI and ML Agents (MLOps)

For teams working with machine learning, building and deploying models is often more complex than writing application code. Training, scaling, and optimizing models usually require specialized knowledge and heavy infrastructure. AI agents are reducing this overhead and making it easier to move models from research into production.

Here are some of the key players in this space:

- Hugging Face: Hugging Face has become the go-to hub for open-source AI, and its agent-like tools make deployment much easier. Developers can fine-tune models, convert them for different environments, and publish them with minimal setup. Once published, a model can be served as an API that’s ready for integration, turning cutting-edge research into a usable product in a matter of hours instead of weeks.

- Weights & Biases: Often called the “GitHub for machine learning experiments,” Weights & Biases helps teams track training runs, compare results, and monitor deployments. By acting as an organizational layer, it keeps workflows transparent and ensures that team members are aligned on results. For teams experimenting heavily, it’s like having an agent that manages the complexity of experimentation so researchers can focus on improving models.

- Replicate: Replicate specializes in simplifying the infrastructure side of AI. It allows developers to run models as APIs without needing to manage GPUs or cloud setup. This makes it especially appealing for smaller teams that want to integrate AI features into their applications but don’t have the resources to maintain dedicated ML infrastructure. Testing and iteration become much faster when hardware concerns are abstracted away.

- OctoML: OctoML focuses squarely on optimization. Its agent-like tools help teams prepare models for production environments by improving performance and reducing costs at scale. For organizations deploying large numbers of models, even small efficiency gains can translate into significant savings. OctoML’s value lies in bridging the gap between high-performance research models and cost-effective, production-ready systems.

AI Agents for Programming Market Snapshot

AI agents for programming and coding are moving from hype into real adoption. In a May 2025 survey, 88% of respondents said their team or business function plans to increase its AI-related budget in the next year, with agentic AI named as the main driver. For many organizations, agents are no longer side experiments but core investments in productivity and efficiency.

The market is forming along clear lines. Large platforms such as GitHub, AWS, and Microsoft are building agents directly into their ecosystems, making adoption natural for teams already using those tools. Enterprise-focused providers like Tabnine are earning trust with privacy-first, on-premise deployments that meet strict compliance needs. Startups such as Cursor and Replit are moving faster, offering lightweight tools that appeal to smaller teams and independent developers.

Adoption patterns vary. Enterprises move carefully, prioritizing security and compliance. Smaller teams move quickly, focusing on speed, cost, and the ability to iterate. Both groups see value, but their reasons for adoption are different.

Looking ahead, the market is unlikely to be dominated by a single tool. Instead, it will consist of multiple ecosystems shaped by company size, infrastructure choices, and developer needs.

How to Choose the Right Agent for You

Here are some practical factors to consider when choosing between different AI coding tools:

- Workflow fit: Identify the biggest bottleneck. If most delays happen in pull requests, a code review agent will save the most time. If bug fixing consumes every sprint, a debugging or testing agent offers higher impact. Matching the tool to the pain point matters more than adding AI everywhere.

- Integration: Agents should connect naturally to your environment. A team using GitHub every day will get the most value from Copilot Agent, while AWS-heavy teams may lean toward Amazon Q Developer. Smooth integration avoids extra overhead.

- Scale and complexity: Small teams benefit from fast, lightweight tools like Cursor or Codeium. Large teams managing millions of lines of code may need agents built for context, such as Sourcegraph Cody. Scale determines how well an agent can keep up.

- Privacy and compliance: Enterprises must weigh data handling carefully. Options like Tabnine, which support on-premise deployment, reduce risk without giving up AI-powered help.
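
One way to weigh these four factors against each other is a simple weighted score. The sketch below is only an illustration of that idea: the weights, the 1 to 5 ratings, and the candidate names `agent_a` and `agent_b` are all made-up placeholders, not measurements of any real product. A team would substitute its own priorities and the ratings it arrives at after a trial.

```python
# Assumed weights: how much each factor matters to this hypothetical team.
WEIGHTS = {"workflow_fit": 0.4, "integration": 0.3, "scale": 0.2, "privacy": 0.1}

# Hypothetical 1-5 ratings a team might assign after a trial; not real product data.
CANDIDATES = {
    "agent_a": {"workflow_fit": 5, "integration": 3, "scale": 2, "privacy": 2},
    "agent_b": {"workflow_fit": 3, "integration": 4, "scale": 4, "privacy": 5},
}


def score(ratings: dict[str, int], weights: dict[str, float]) -> float:
    """Weighted sum of a candidate's ratings across all factors."""
    return sum(weights[factor] * ratings[factor] for factor in weights)


def rank(candidates: dict[str, dict[str, int]], weights: dict[str, float]) -> list[str]:
    """Candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: score(candidates[name], weights), reverse=True)


print(rank(CANDIDATES, WEIGHTS))  # prints ['agent_b', 'agent_a']
```

Changing the weights changes the answer. With these placeholder ratings, a team that cares only about workflow fit and integration would rank `agent_a` first, which mirrors the point that the right choice depends on where the bottleneck is.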

Conclusion

AI agents for programming are changing how software is built by taking on real tasks. They can fix bugs, run tests, review pull requests, and even help with deployment. This reduces repetitive work and shortens the path from idea to production.

For developers, this means more time to focus on design and problem-solving. For teams, it means faster cycles and more reliable results. The best way to start is simple: pick one workflow, try an agent, and see what difference it makes. Whether you begin with lightweight AI coding tools or adopt enterprise-grade solutions, the impact is clear.

Early adopters who choose their agents well will set the pace, and their ways of working will shape the future of the industry. The first step may be small, but it can open the door to significant changes in how we code, test, and ship software.

This article was contributed to the Scribe of AI blog by Aakash R.
