Ever wondered how your phone recognizes your face but not your sibling’s, or how Google Photos groups your vacation pictures automatically? While it seems like magic, it’s actually AI image recognition quietly crunching pixels in the background.

AI, in simple terms, is a branch of computer science that allows machines to perform tasks that usually require human intelligence, such as recognizing patterns, making decisions, or learning from experience. When talking about photos, AI can see images and extract information from them, even though it doesn’t experience them the way we do.

How, you ask? Think about the last picture you took, whether it was a quick mirror selfie or that plate of pasta you couldn’t resist sharing. For us, it’s a memory. For AI, it’s just millions of tiny dots called pixels, each carrying a fragment of information. Unlike humans, AI doesn’t see pasta or a smiling face; it sees edges and patterns.

By analyzing these patterns, AI turns raw pixels into smart data. This is a type of information that helps machines understand what’s in an image, make sense of it, and even act on it. This capability powers everything from personalized recommendations to autonomous cars spotting road signs.

In this post, we’ll explore how AI image recognition transforms simple images into actionable data, dive into real-world applications, and look ahead to where this technology is headed.

How AI Image Recognition Works

When we look at a photo, our brains instantly recognize what’s in front of us. That happens because the brain has years of learning and understanding built in.

For a machine, however, a photo appears quite different. It’s not a scene but a grid of millions of tiny pixels. On its own, a pixel means nothing. So how does AI see images and learn to find meaning in this sea of pixels? The answer is an interesting branch of AI called computer vision.
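To make the “grid of pixels” idea concrete, here is a minimal sketch in plain Python. The tiny 3x3 grayscale image and its values are made up for illustration; a real photo has millions of pixels and usually three color channels per pixel.

```python
# A hypothetical 3x3 grayscale image: each number is one pixel's brightness
# (0 = black, 255 = white). The values are invented for illustration.
image = [
    [0, 128, 255],
    [0, 128, 255],
    [0, 128, 255],
]

height = len(image)
width = len(image[0])
pixel_count = height * width

# To the machine, the "photo" is only these numbers, so even a simple
# property such as overall brightness has to be computed from them.
mean_brightness = sum(sum(row) for row in image) / pixel_count

print(f"{width}x{height} image, {pixel_count} pixels")
print(f"mean brightness: {mean_brightness:.1f}")
```

A single number on its own says nothing about the scene; meaning only appears once many pixels are compared with each other.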

Computer vision is a field of AI that enables machines to interpret and understand visual information. It mimics the way human vision works but does so using numbers. The goal is to help machines extract information from images or videos much as we do, often faster and, on well-defined tasks, with comparable accuracy.

Let’s dive deeper into how computer vision breaks down this process.

- Feature Extraction: Before AI can understand an image, it first needs to pick out what’s important, such as edges, corners, textures, and shapes. This step is called feature extraction. During this process, AI scans through the pixels to identify these defining elements.

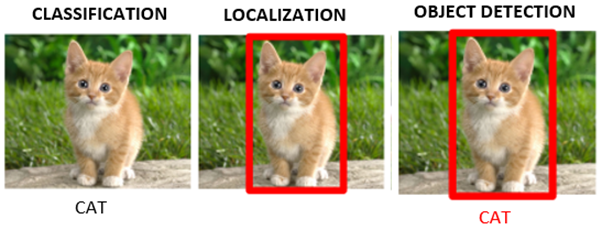

- Image Classification: After extracting features, the next step is image classification. This is where the AI determines what the entire image represents. For example, after analyzing the features of an object, it may classify it as a dog or a cat.

- Object Detection: Sometimes, an image contains multiple objects. Object detection identifies each of them by drawing a bounding box around it and attaching a label. This step enables AI to identify both what is present and where it is located.
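As a rough illustration of the feature extraction step, the sketch below finds a vertical edge by measuring how much brightness changes between neighboring pixels. The function name `horizontal_edges` and the 4x4 image are hypothetical, and real systems learn far richer features than this hand-written rule.

```python
def horizontal_edges(image):
    """Return the absolute brightness change between horizontally adjacent pixels."""
    return [
        [abs(row[x + 1] - row[x]) for x in range(len(row) - 1)]
        for row in image
    ]


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

edges = horizontal_edges(image)

# Every row becomes [0, 190, 0]: the large middle value marks the boundary
# between the dark and bright halves, which is the "edge" feature.
for row in edges:
    print(row)

# One simple summary feature: total edge strength across the image.
edge_strength = sum(sum(row) for row in edges)
print("edge strength:", edge_strength)
```

Flat regions produce zeros and the boundary produces large values, so the output already says more about the image’s structure than the raw pixels did.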

All these steps are made possible by what we call AI models, also known as computer vision models. A model is a mathematical system trained to recognize patterns, make predictions, and improve over time. It learns by processing thousands, or even millions, of labeled examples.

After training, these models can interpret new images with greater accuracy, relying on data and layered algorithms rather than human perception.

Data Labeling and Datasets: The Foundation of Learning

Now that we know how AI sees and identifies patterns in images, the next question is: how does it learn what those patterns mean?

The explanation comes down to data labeling. In simple terms, data labeling means tagging images with correct information. These tags help the AI connect what it sees (pixels and patterns) with what it understands (objects and context).

All these labeled images come together in massive datasets, which act like textbooks for the model. For instance, ImageNet includes millions of labeled images across thousands of categories. Similarly, COCO (Common Objects in Context) contains everyday scenes featuring people, furniture, and animals.

The quality of these datasets directly impacts the model’s performance. A model trained on accurately labeled images learns to recognize objects more reliably in real-world settings. Once that groundwork is laid, the model is ready for its next step: learning from data.
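Here is a small sketch of what labeled data can look like once it is loaded into a program. The file names and labels are invented, and this is not the actual ImageNet or COCO format; it only shows the idea of pairing each image with a tag and turning tags into numbers a model can use.

```python
from collections import Counter

# Hypothetical labeled examples: (file name, label). All names are made up.
labeled_images = [
    ("img_001.jpg", "dog"),
    ("img_002.jpg", "cat"),
    ("img_003.jpg", "dog"),
    ("img_004.jpg", "bird"),
]

# Models work with numbers, so each label is mapped to an integer class index.
class_names = sorted({label for _, label in labeled_images})
class_to_index = {name: index for index, name in enumerate(class_names)}

# The training targets are the class indices, in the same order as the images.
targets = [class_to_index[label] for _, label in labeled_images]

# Counting examples per label is a quick check for imbalance in the dataset.
label_counts = Counter(label for _, label in labeled_images)

print(class_to_index)
print(targets)
print(label_counts.most_common())
```

A lopsided count here, for example hundreds of dogs and only a handful of birds, is an early warning that the model may struggle with the rarer category.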

How AI Learns: Model Training Made Simple

We’ve covered how AI detects patterns in images and how labels give those patterns meaning. So what does the learning itself actually look like?

AI models learn from labeled images, pictures tagged with descriptions such as flowers or buildings. These labels act as a guide, helping the model understand what each image represents.

The learning happens through a process called supervised learning. The model processes sets of labeled images, makes a prediction for each one, compares that prediction with the correct label, and adjusts its internal parameters to reduce the error. It’s like a child learning to recognize animals, except this kid never gets tired and won’t ask for snacks.
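The predict, compare, and adjust cycle can be sketched with a toy model. This example assumes each image has already been reduced to a single made-up feature (average brightness between 0 and 1) and learns to separate hypothetical “night” (0) and “day” (1) photos with logistic regression. Real image models have millions of parameters, but the loop has the same shape.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1) so it reads as a probability."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical training data: (average brightness, label), 0 = night, 1 = day.
examples = [(0.1, 0), (0.2, 0), (0.3, 0), (0.7, 1), (0.8, 1), (0.9, 1)]

weight, bias = 0.0, 0.0
learning_rate = 1.0

for epoch in range(2000):
    for brightness, label in examples:
        prediction = sigmoid(weight * brightness + bias)  # 1. predict
        error = prediction - label                        # 2. compare with the label
        weight -= learning_rate * error * brightness      # 3. adjust the parameters
        bias -= learning_rate * error


def predict(brightness):
    """Return 1 (day) if the model's probability is at least 0.5, else 0 (night)."""
    return 1 if sigmoid(weight * brightness + bias) >= 0.5 else 0


print(predict(0.2), predict(0.8))
```

Each pass nudges the parameters a little in the direction that reduces the error, which is what “adjusts itself” means in practice.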

A core architecture behind AI image recognition is the convolutional neural network (CNN). CNNs are especially good at spotting patterns in images, such as edges, textures, and shapes.
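To give a feel for what a CNN computes, the sketch below runs one hand-written filter through the three operations a typical convolutional block uses: convolution, ReLU, and max pooling. The kernel values and the 6x6 image are illustrative assumptions; in a trained network the kernel values are learned from data rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it is a cross-correlation.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(
                image[y + dy][x + dx] * kernel[dy][dx]
                for dy in range(k)
                for dx in range(k)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Shrink the map by keeping the strongest response in each 2x2 block."""
    return [
        [
            max(
                feature_map[y][x], feature_map[y][x + 1],
                feature_map[y + 1][x], feature_map[y + 1][x + 1],
            )
            for x in range(0, len(feature_map[0]) - 1, 2)
        ]
        for y in range(0, len(feature_map) - 1, 2)
    ]


# Hypothetical 6x6 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# A hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(convolve2d(image, vertical_edge_kernel))
pooled = max_pool_2x2(feature_map)
print(pooled)
```

Stacking many such filters in layers is what lets a CNN move from edges to textures to whole shapes.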

Through this process, AI turns a jumble of pixels into meaningful information, allowing models to see the world in a way that’s useful for everything from facial recognition to self-driving cars.

How AI Measures Its Progress: Accuracy and Evaluation Metrics

While it may appear that training the model is the end of the process, it’s certainly not. The model also requires a method to evaluate its performance. Just as students receive report cards, AI models are also graded using a set of evaluation metrics.

Evaluation metrics are numbers that tell us how accurate, reliable, and consistent the model’s predictions are. They help answer questions like: Did the model identify the right object? Or did it confuse one thing for another?

Two of the most common metrics are precision and recall. Precision measures how many of the model’s positive predictions are actually correct. For example, if a model labels 100 photos as cats and 90 of them are truly cats, the precision is 90%. Recall, on the other hand, measures how many of the actual cats the model managed to find. So, if there were 120 cat photos in total and it caught 90, the recall is 75%.
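The cat example above can be checked with a few lines of Python. The helper name `precision_recall` is just an illustrative choice; the numbers are the ones from the paragraph.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Compute precision and recall from raw prediction counts."""
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return precision, recall


# Numbers from the cat example:
predicted_cats = 100   # photos the model labeled as cats
correct_cats = 90      # of those, how many really were cats
actual_cats = 120      # cat photos that exist in the whole set

true_positives = correct_cats
false_positives = predicted_cats - correct_cats   # 10 non-cats labeled as cats
false_negatives = actual_cats - correct_cats      # 30 cats the model missed

precision, recall = precision_recall(true_positives, false_positives, false_negatives)
print(f"precision={precision:.0%}, recall={recall:.0%}")  # precision=90%, recall=75%
```

The two numbers pull in different directions: a model that labels almost nothing as a cat can score high precision with poor recall, which is why both are reported together.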

These metrics help researchers spot weaknesses: low precision means too many false alarms, while low recall means too many misses. Tracking both confirms whether the model is genuinely learning to see better. Once the model learns and proves it can see correctly, the next step is putting that intelligence to work, turning it into something useful.

Smart Data Behind the Scenes

Once an AI model learns to recognize objects and patterns in photos, the next step is turning that understanding into something useful, which we call smart data.

When a model processes an image, it doesn’t see just pixels. It breaks them down into feature vectors, numerical representations of visual elements, such as color gradients, shapes, and textures. These vectors are compared with trained categories to generate labels such as sunset, beach, or portraits. This makes the photo library searchable.

For example, typing sunset in Google Photos immediately shows all the sunset shots. That’s smart data at work. By converting patterns and labels into structured information, AI allows machines to find and organize images in ways humans would find impossible. But it doesn’t stop there.

AI can also interpret the context of an image, helping suggest tags, organize albums, or highlight memories. In short, smart data is the bridge between raw pixels and real-world functionality. By the time a photo reaches the app or device, it has already been decoded and is ready to serve the user’s needs.
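The step from feature vectors to a searchable library can be sketched as follows. Everything here is a simplified assumption: the three-number vectors, the category values, and the file names are invented, and cosine similarity is only one possible way to compare vectors. Real systems use vectors with hundreds of dimensions produced by a trained model.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical feature vectors: [warm colors, blue tones, skin tones].
category_vectors = {
    "sunset": [0.9, 0.2, 0.1],
    "beach": [0.3, 0.9, 0.1],
    "portrait": [0.2, 0.1, 0.9],
}

photo_vectors = {
    "IMG_001.jpg": [0.8, 0.3, 0.0],
    "IMG_002.jpg": [0.2, 0.8, 0.2],
    "IMG_003.jpg": [0.85, 0.1, 0.2],
}


def best_label(vector):
    """Pick the category whose vector is most similar to the photo's vector."""
    return max(
        category_vectors,
        key=lambda name: cosine_similarity(vector, category_vectors[name]),
    )


# Build the "smart data": a label -> photos index that makes the library searchable.
search_index = {}
for filename, vector in photo_vectors.items():
    search_index.setdefault(best_label(vector), []).append(filename)

print(search_index.get("sunset", []))
```

Once the index exists, a search for “sunset” is a simple lookup; the expensive image analysis happened earlier, when each photo was labeled.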

Beyond Algorithms: What Makes Smart Data Possible

While algorithms make AI intelligent, there are other factors that define how well it works. Here are a few that matter just as much.

- Hardware: Powerful GPUs and specialized chips, such as TPUs, handle trillions of calculations per second. This enables models to process high-resolution images, detect faces, and recognize objects in real time.

- Data Quality: AI is only as good as the images it learns from. High-quality datasets help prevent bias and improve accuracy across various lighting conditions and environments.

- Ethics and Privacy: With AI’s growing ability to recognize faces and locations, responsible data handling, consent, and transparency are key to building public trust, especially as issues like AI doppelgangers come into focus.

- Energy Efficiency: Training large vision models can be energy-intensive. Optimizing model size and hardware usage helps reduce the carbon footprint while maintaining high performance.

Together, these elements form the foundation that turns photos into meaningful data and keeps AI’s use of that data responsible.

Real-World Applications of AI Image Recognition

From exploring the cosmos to analyzing wildlife, AI is solving real problems and creating opportunities we never imagined. Let’s explore some standout examples where AI is turning photos into meaningful insights.

Face Recognition and Identity Detection

Facial recognition is part of daily life, whether it’s unlocking our phones or helping Google Photos sort our pictures. Tools like Face ID and Google Photos rely on AI photo identifiers to map facial features, extract key points, and match them to known identities, all within seconds.

These systems interpret patterns, depth, and angles to ensure accuracy, even under varying lighting conditions or expressions. A great example is Changi Airport in Singapore, which uses AI-driven biometric boarding to let passengers move through security checks and boarding gates without presenting documents. The system cross-verifies faces with passport data, creating a smooth experience.

Analyzing Images in Wildlife Conservation

In wildlife research, camera traps have become an important part of monitoring animal populations. However, these cameras can capture tens of thousands of images in just a few weeks, and sorting through them manually can take months.

Computer vision is changing that. For example, in the Snapshot Serengeti project in Tanzania, researchers trained deep learning models to identify and count species using millions of camera-trap images from the Snapshot Serengeti dataset, one of the largest publicly available wildlife image collections. The resulting models detect animals with impressive precision.

Using Computer Vision in Astronomy

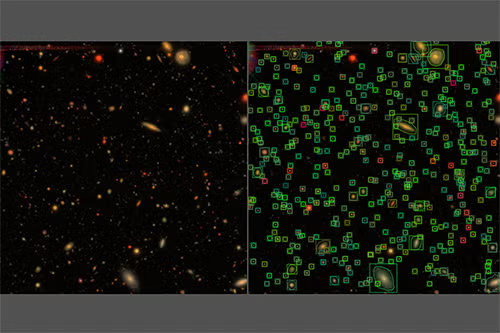

The universe is vast, and so is the data it produces. Modern telescopes capture billions of celestial images, far too many for humans to analyze manually. In space exploration, AI image recognition can help detect stars, galaxies, nebulae, and even faint or overlapping celestial bodies that might otherwise go unnoticed.

By identifying subtle patterns in light intensity, shape, and color, these models reveal phenomena invisible to the naked eye. Researchers at the University of Illinois, for instance, developed an AI system that can process telescope images with great accuracy. It helps scientists pinpoint new galaxies and classify celestial objects in a fraction of the time traditional methods would take.

Future of AI in Image Recognition

The story of AI and photos is just getting started. As technology evolves, we’re entering an era where AI photo recognition merges with multimodal AI, systems capable of understanding multiple types of input at once, such as images, text, and audio.

Google’s Gemini is one of the most powerful examples of this shift. Designed as a multimodal model from the ground up, Gemini can interpret and reason across images, text, video, and audio together. It can extract structured data from screenshots, summarize visual information, and even answer contextual questions.

We may also see AR glasses that bring photos to life, layering information or memories right into your view. AR (augmented reality) is about mixing digital elements with the real world, and glasses powered by vision AI could take this further.

One such example is Meta’s Project Orion, a prototype pair of AR glasses that overlays digital content directly onto the real world. With computer vision, these glasses could recognize patterns, recall memories, and provide context, turning ordinary photos into interactive experiences.

Conclusion

Photos may seem simple, but with the help of artificial intelligence image processing, they can be turned into smart data that helps solve real-world problems.

With AI, every picture can hold more than meets the eye: models can detect patterns, provide context, and turn images into immersive experiences.

From personal memories to scientific discoveries, photos are becoming a key factor that informs and guides our understanding of the world. For more ideas on how AI can boost your productivity in everyday life, check out AI for personal productivity.

This article was contributed to the Scribe of AI blog by Kalyani Burnwal.
